```python
def main(db_fname):
    print('START!')
    db = h5py.File(db_fname, 'r')
    dsets = sorted(db['data'].keys())
    print("total number of images : ", colorize(Color.RED, len(dsets), highlight=True))
    print()
    for num, k in enumerate(dsets):
        # add txt: create (or empty) this image's gt file; coords() appends the word lines to it
        f = open('{}/gt_img_{}.txt'.format(GT_PATH, num), 'w')
        rgb = db['data'][k][...]
        charBB = db['data'][k].attrs['charBB']
        wordBB = db['data'][k].attrs['wordBB']
        txt = db['data'][k].attrs['txt']
        #print('wordBB:', wordBB)
        #print('txt:', txt)
        #print('k', k)
        #f.write()
        viz_textbb(rgb, [charBB], wordBB, num=num, index=k, txt=txt)
        f.close()
        print("image name : ", colorize(Color.RED, k, bold=True))
        #print(" ** no. of chars : ", colorize(Color.YELLOW, charBB.shape[-1]))
        print(" ** no. of words : ", colorize(Color.YELLOW, wordBB.shape[-1]))
        print(" ** text : ", colorize(Color.GREEN, txt))
        # print " ** text : ", colorize(Color.GREEN, txt.encode('utf-8'))
        # if 'q' in raw_input("next? ('q' to exit) : "):
        #     break
    db.close()
```

1. Target

text.py

```python
for i_idx, image in enumerate(self.images_root.glob('*.jpg')):
    # image = pathlib.Path('/data/ocr/det/icdar2015/detection/train/imgs/img_756.jpg')
    # gt = pathlib.Path('/data/ocr/det/icdar2015/detection/train/gt/gt_img_756.txt')
    gt = self.gt_root / image.with_name('gt_{}'.format(image.stem)).with_suffix('.txt').name
    with gt.open(mode='r') as f:
        bboxes = []
        texts = []
        for line in f:
            text = line.strip('\ufeff').strip('\xef\xbb\xbf').strip().split(',')
            x1, y1, x2, y2, x3, y3, x4, y4 = list(map(float, text[:8]))
            bbox = [[x1, y1], [x3, y3]]  # corners 1 and 3 (opposite corners of the quad)
            transcript = ','.join(text[8:])  # the transcript itself may contain commas
            if transcript == '###' and self.training:
                continue  # '###' marks illegible ("don't care") text
            bboxes.append({"image_id": i_idx, "category_id": 1, "bbox": bbox})  # label 1 for text, 0 for background
            texts.append(transcript)
    if len(bboxes) > 0:
        bboxes = np.array(bboxes)
        all_bboxs.append(bboxes)
        all_texts.append(texts)
        all_images.append(image)
        all_ids.append(i_idx)
return all_images, all_bboxs, all_texts, all_ids
```
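The loader finds each image's ground truth by file name alone. Below is a minimal sketch of that mapping on its own. It uses a hypothetical helper `gt_path_for` and illustrative paths. For stems without extra dots, it produces the same name as the loader's `with_name(...).with_suffix('.txt')` chain.

```python
from pathlib import Path


def gt_path_for(image, gt_root):
    """Map an image path to its gt file: imgs/img_756.jpg -> <gt_root>/gt_img_756.txt."""
    # Path.stem is the file name without its last suffix, e.g. "img_756"
    return Path(gt_root) / "gt_{}.txt".format(Path(image).stem)


if __name__ == "__main__":
    # as_posix() prints forward slashes on every platform
    print(gt_path_for("data/train/imgs/img_756.jpg", "data/train/gt").as_posix())
    # data/train/gt/gt_img_756.txt
```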

Sample gt

Directory layout: data/train/gt, data/train/imgs

SAMPLE

gt_img_1.txt

```text
377,117,463,117,465,130,378,130,Genaxis Theatre
493,115,519,115,519,131,493,131,[06]
374,155,409,155,409,170,374,170,###
492,151,551,151,551,170,492,170,62-03
376,198,422,198,422,212,376,212,Carpark
494,190,539,189,539,205,494,206,###
374,1,494,0,492,85,372,86,###
```
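Each line stores the four corners `x1,y1,...,x4,y4`, followed by the transcript. The string `###` marks illegible text, which the loader skips during training. Below is a minimal parsing sketch with two hypothetical helpers, `parse_gt_line` and `axis_aligned_box`:

- `parse_gt_line` re-joins everything after the eighth field, so a transcript that contains a comma stays intact.
- `axis_aligned_box` takes the min/max over all four corners, so rotated quads still get a box that covers them.

```python
def parse_gt_line(line):
    """Parse one ICDAR2015-style line: x1,y1,...,x4,y4,transcript."""
    # drop a leading BOM character and surrounding whitespace, then split on commas
    fields = line.lstrip("\ufeff").strip().split(",")
    coords = [float(v) for v in fields[:8]]
    # the transcript may itself contain commas, so re-join the remaining fields
    transcript = ",".join(fields[8:])
    quad = list(zip(coords[0::2], coords[1::2]))  # [(x1, y1), ..., (x4, y4)]
    dont_care = transcript == "###"
    return quad, transcript, dont_care


def axis_aligned_box(quad):
    """Smallest upright box [[xmin, ymin], [xmax, ymax]] around all four corners."""
    xs = [x for x, _ in quad]
    ys = [y for _, y in quad]
    return [[min(xs), min(ys)], [max(xs), max(ys)]]


if __name__ == "__main__":
    quad, text, ignore = parse_gt_line("377,117,463,117,465,130,378,130,Genaxis Theatre")
    print(text, ignore)            # Genaxis Theatre False
    print(axis_aligned_box(quad))  # [[377.0, 117.0], [465.0, 130.0]]
```

Taking only corners 1 and 3, as `[[x1, y1], [x3, y3]]` does, gives this box exactly only for upright rectangles. For the last sample line, it gives `[[374, 1], [492, 85]]` instead of `[[372, 0], [494, 86]]`.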

2. SynthText - kr

```python
def main(db_fname):
    db = h5py.File(db_fname, 'r')
    dsets = sorted(db['data'].keys())
    print("total number of images : ", colorize(Color.RED, len(dsets), highlight=True))
    print()
    for k in dsets:
        rgb = db['data'][k][...]
        charBB = db['data'][k].attrs['charBB']
        wordBB = db['data'][k].attrs['wordBB']
        txt = db['data'][k].attrs['txt']
        viz_textbb(rgb, [charBB], wordBB, index=k, txt=txt)
        print("image name : ", colorize(Color.RED, k, bold=True))
        print(" ** no. of chars : ", colorize(Color.YELLOW, charBB.shape[-1]))
        print(" ** no. of words : ", colorize(Color.YELLOW, wordBB.shape[-1]))
        print(" ** text : ", colorize(Color.GREEN, txt))
    db.close()
```

Output:

```text
h5 file path : gen/dataset_sample.h5
total number of images : 1
wordBB: [[[208.6069 133.4994 101.2383 84.159134 93.3638 ]
  [395.68124 539.3367 182.21889 198.9645 172.35709 ]
  [393.8134 539.36487 181.0756 197.66335 170.69101 ]
  [206.73904 133.5276 100.09502 82.85799 91.69772 ]]

 [[378.6737 426.93433 247.17474 97.715576 178.44614 ]
  [385.53638 426.81396 249.13928 101.58473 180.8259 ]
  [436.45404 521.8998 296.2671 140.1925 236.12885 ]
  [429.59137 522.02014 294.30255 136.32335 233.74908 ]]]
txt: ['헤이브릴' '충저우해협' '된지' '토반묘' '삼작']
k ant+hill_10.jpg_0
```
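In the h5 file, `wordBB` is a 2x4xN array, which is how `viz_textbb` indexes it:

- The first axis picks x or y.
- The second axis picks the corner.
- The third axis picks the word, so `wordBB[:, :, i]` is one word's quad.

`coords()` pairs box `i` with `txt[i]`. That pairing lines up only when every `txt` entry is a single word, as in the sample printed above. In the original SynthText, an entry may hold several whitespace-separated words, so this sketch splits `txt` into words first and checks the count before formatting ICDAR2015 lines. The helpers are hypothetical. The sketch expects `str` transcripts, as printed above; `bytes` would need decoding first.

```python
def split_words(txt):
    """Flatten text instances into words, assuming whitespace separates words."""
    return [w for instance in txt for w in instance.split()]


def wordbb_to_icdar_lines(wordBB, txt):
    """Turn a 2x4xN word-box array (NumPy array or nested lists) into ICDAR2015 lines."""
    words = split_words(txt)
    n_boxes = len(wordBB[0][0])  # N, the size of the last axis
    if len(words) != n_boxes:
        raise ValueError("{} words but {} boxes".format(len(words), n_boxes))
    lines = []
    for i, word in enumerate(words):
        pts = []
        for v in range(4):  # the four corners in stored order
            # int() truncates toward zero, as coords() does
            pts += [int(wordBB[0][v][i]), int(wordBB[1][v][i])]
        lines.append(",".join(str(p) for p in pts) + "," + word)
    return lines


if __name__ == "__main__":
    # one hypothetical word with corners (10,20) (50,20) (50,40) (10,40)
    demo = [[[10.0], [50.0], [50.0], [10.0]],
            [[20.0], [20.0], [40.0], [40.0]]]
    print(wordbb_to_icdar_lines(demo, ["hello"]))  # ['10,20,50,20,50,40,10,40,hello']
```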

visualize_results.py

```python
# split words and create jpg files
def _tojpg(text_im, polygon, index, k, typ):
    img = Image.fromarray(text_im, 'RGB')
    img.save(IMG_PATH + '/image/' + k + '.jpg')
    # convert to numpy (for convenience)
    imArray = np.asarray(img)
    maskIm = Image.new('L', (imArray.shape[1], imArray.shape[0]), 0)
    ImageDraw.Draw(maskIm).polygon(polygon, outline=1, fill=1)
    mask = np.array(maskIm)  # built but not applied; only the bounding rectangle is cropped
    # assemble new image (uint8: 0-255)
    newImArray = np.empty(imArray.shape, dtype='uint8')
    # colors (first three channels, RGB)
    newImArray[:, :, :3] = imArray[:, :, :3]
    # back to Image from numpy
    newIm = Image.fromarray(newImArray, "RGB")
    x_min = min([x[0] for x in polygon])
    x_max = max([x[0] for x in polygon])
    y_min = min([x[1] for x in polygon])
    y_max = max([x[1] for x in polygon])
    newIm = newIm.crop((x_min, y_min, x_max, y_max))
    if typ == "char":
        newIm.save(IMG_PATH + "/characters/" + typ + "_" + str(index) + ".jpg")
        with open('ch_coords.txt', 'a') as f1:
            f1.write(typ + str(index) + ".jpg" + "\t" + str(x_min) + "," + str(y_min) + "," + str(x_max) + "," + str(y_max) + "\n")
    elif typ == "word":
        newIm.save(IMG_PATH + "/words/" + typ + "_" + str(index) + ".jpg")
        with open('wd_coords.txt', 'a') as f2:
            f2.write(typ + str(index) + ".jpg" + "\t" + str(x_min) + "," + str(y_min) + "," + str(x_max) + "," + str(y_max) + "\n")
```

visualize_result.py

```python
def viz_textbb(fignum, text_im, bb_list, alpha=1.0):
    """
    text_im : image containing text
    bb_list : list of 2x4xn_i bounding-box matrices
    """
    plt.close(fignum)
    plt.figure(fignum)
    plt.imshow(text_im)
    #plt.hold(True)  # plt.hold was removed in Matplotlib 3.0
    H, W = text_im.shape[:2]
    for i in range(len(bb_list)):
        bbs = bb_list[i]
        ni = bbs.shape[-1]
        for j in range(ni):
            bb = bbs[:, :, j]
            bb = np.c_[bb, bb[:, 0]]  # close the polygon: repeat the first corner
            plt.plot(bb[0, :], bb[1, :], 'r', linewidth=2, alpha=alpha)
    plt.gca().set_xlim([0, W - 1])
    plt.gca().set_ylim([H - 1, 0])
    plt.show(block=False)
```

gt_ synthgen.py

In `def coords`, `tmp = list(zip(x_list, y_list))` prints as follows:

```text
[(235, 29), (299, 33), (297, 62), (232, 58)]
[(230, 70), (473, 121), (453, 215), (210, 164)]
[(129, 445), (244, 445), (244, 508), (129, 508)]
[(135, 10), (210, 10), (210, 41), (135, 41)]
[(483, 5), (586, 11), (582, 78), (480, 73)]
[(57, 499), (123, 485), (128, 510), (62, 523)]
[(217, 6), (279, 6), (279, 34), (217, 33)]
[(209, 181), (372, 199), (367, 247), (203, 229)]
[(4, 67), (115, 68), (115, 131), (4, 130)]
[(475, 84), (549, 87), (548, 126), (473, 123)]
[(5, 481), (83, 466), (88, 492), (10, 506)]
[(369, 220), (424, 226), (421, 253), (366, 247)]
[(73, 274), (199, 274), (199, 322), (73, 321)]
[(500, 129), (594, 132), (593, 163), (499, 159)]
[(4, 454), (94, 436), (98, 459), (9, 476)]
[(335, 267), (395, 287), (387, 314), (326, 293)]
[(147, 319), (188, 320), (188, 341), (147, 341)]
[(423, 10), (483, 13), (481, 52), (420, 49)]
[(298, 46), (334, 48), (334, 73), (297, 72)]
[(409, 192), (454, 197), (451, 220), (406, 214)]
[(277, 398), (522, 399), (521, 509), (276, 508)]
[(548, 73), (591, 75), (589, 113), (546, 111)]
```
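Each `tmp` above is one word quad, in the order `coords()` writes it to the gt file. SynthText orders corners top-left, top-right, bottom-right, bottom-left. Assuming that order, the quads should run clockwise on screen, where the y axis points down; ICDAR2015 ground truth also lists corners clockwise. Below is a quick sanity check with the shoelace formula, using hypothetical helper names.

```python
def signed_area(quad):
    """Shoelace formula; a positive value means clockwise when the y axis points down."""
    total = 0
    # pair each corner with the next one, wrapping the last back to the first
    for (x1, y1), (x2, y2) in zip(quad, quad[1:] + quad[:1]):
        total += x1 * y2 - x2 * y1
    return total / 2


def is_clockwise(quad):
    return signed_area(quad) > 0


if __name__ == "__main__":
    tmp = [(235, 29), (299, 33), (297, 62), (232, 58)]  # first quad printed above
    print(signed_area(tmp), is_clockwise(tmp))  # 1880.5 True
```

A negative area flags a quad whose corners were stored in the opposite order.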

```python
f = open('{}/gt_img_{}.txt'.format(GT_PATH, num), 'w')

IMG_PATH = '/content/drive/Shareddrives/OCR_Transformer/SynthText_kr/imgs'
GT_PATH = '/content/drive/Shareddrives/OCR_Transformer/SynthText_kr/gt'
h5_PATH = 'gen/dataset_sample.h5'  # 'gen/dataset_kr.h5'
```

RESULT (visualize_result.py)

"""

Visualize the generated localization synthetic

data stored in h5 data-bases

"""

from __future__ import division

import os

import os.path as osp

import numpy as np

import matplotlib

matplotlib.use('Agg')

import matplotlib.pyplot as plt

import h5py

import cv2

from common import *

import itertools

from PIL import Image, ImageDraw

import re

import json

# initialize index

num = 1

char_ind = 0

word_ind = 0

def viz_textbb(text_im, charBB_list, wordBB, num, index, txt, alpha=1.0):

"""

text_im : image containing text

charBB_list : list of 2x4xn_i bounding-box matrices

wordBB : 2x4xm matrix of word coordinates

"""

#plt.close(1)

#plt.figure(1)

#plt.imshow(text_im)

img = Image.fromarray(text_im, 'RGB')

img.save('{}/img_{}.jpg'.format(IMG_PATH,num))

#plt.hold(True)

H,W = text_im.shape[:2]

# plot the word-BB:

txt_index = 0 #txt 순서대로 출력

for i in range(wordBB.shape[-1]):

bb = wordBB[:,:,i]

bb = np.c_[bb,bb[:,0]]

#plt.plot(bb[0,:], bb[1,:], 'g', alpha=alpha)

coords(bb[0,:], bb[1,:], num, index, text_im, txt[txt_index])

txt_index = txt_index +1

#_tojpg(text_im, bb, index, num, index, typ='word')

# visualize the indiv vertices:

#vcol = ['r','g','b','k']

#for j in range(4):

# plt.scatter(bb[0,j],bb[1,j],color=vcol[j])

# plot the character-BB:

#for i in range(len(charBB_list)):

# bbs = charBB_list[i]

# ni = bbs.shape[-1]

# for j in range(ni):

# bb = bbs[:,:,j]

# bb = np.c_[bb,bb[:,0]]

# plt.plot(bb[0,:], bb[1,:], 'r', alpha=alpha/2)

# coords(bb[0,:], bb[1,:], index, text_im, "char")

# generate image files and coordinate files

def coords(x_list, y_list, num, k, img, word):

tmp = []

global char_ind

global word_ind

# remove alpha

x_list=x_list[:-1]

y_list=y_list[:-1]

# change datatype

x_list=[int(x) for x in x_list]

y_list=[int(y) for y in y_list]

# generate coords list

tmp = list(zip(x_list, y_list))

with open('{}/gt_img_{}.txt'.format(GT_PATH,num),'a') as f :

for i,xy in enumerate(tmp) :

x,y = xy

f.write('{},{},'.format(x,y))

if (i+1) % 4 == 0 :

f.write('{}\n'.format(word) )

#coords to image file

k=k.split('.')[0]

word_ind = word_ind + 1

#_tojpg(img, tmp, word_ind, num,k, word)

# split words and create jpg files

def _tojpg(text_im, polygon, index, num, k, word):

img = Image.fromarray(text_im, 'RGB')

img.save(IMG_PATH+'/img_{}.jpg'.format(num))

# convert to numpy (for convenience)

imArray = np.asarray(img)

maskIm = Image.new('L', (imArray.shape[1], imArray.shape[0]), 0)

ImageDraw.Draw(maskIm).polygon(polygon, outline=1, fill=1)

mask = np.array(maskIm)

# assemble new image (uint8: 0-255)

newImArray = np.empty(imArray.shape,dtype='uint8')

# colors (three first columns, RGB)

newImArray[:,:,:3] = imArray[:,:,:3]

# back to Image from numpy

newIm = Image.fromarray(newImArray, "RGB")

x_min = min([x[0] for x in polygon])

x_max = max([x[0] for x in polygon])

y_min = min([x[1] for x in polygon])

y_max = max([x[1] for x in polygon])

#print('**')

#print(typ+str(index)+".jpg"+"\t"+str(x_min)+","+str(y_min)+","+str(x_max)+","+str(y_max)+"\n")

newIm = newIm.crop((x_min, y_min, x_max, y_max))

newIm.save(IMG_PATH+"/words/"+"testtt_"+str(index)+".jpg") #save image

f2 = open('wd_coords.txt', 'a')

f2.write(typ+str(index)+".jpg"+"\t"+str(x_min)+","+str(y_min)+","+str(x_max)+","+str(y_max)+"\n")

def main(db_fname):

db = h5py.File(db_fname, 'r')

dsets = sorted(db['data'].keys())

print ("total number of images : ", colorize(Color.RED, len(dsets), highlight=True))

print()

for num ,k in enumerate(dsets):

#add txt

f = open('{}/gt_img_{}.txt'.format(GT_PATH,num),'w')

rgb = db['data'][k][...]

charBB = db['data'][k].attrs['charBB']

wordBB = db['data'][k].attrs['wordBB']

txt = db['data'][k].attrs['txt']

#print('wordBB:',wordBB)

#print('txt:',txt)

#print('k',k)

#f.write()

viz_textbb(rgb, [charBB], wordBB, num=num, index=k, txt=txt)

f.close()

print( "image name : ", colorize(Color.RED, k, bold=True))

#print (" ** no. of chars : ", colorize(Color.YELLOW, charBB.shape[-1]))

print (" ** no. of words : ", colorize(Color.YELLOW, wordBB.shape[-1]))

print (" ** text : ", colorize(Color.GREEN, txt))

# print " ** text : ", colorize(Color.GREEN, txt.encode('utf-8'))

# if 'q' in raw_input("next? ('q' to exit) : "):

# break

db.close()

if __name__=='__main__':

IMG_PATH ='/content/drive/Shareddrives/OCR_Transformer/SynthText_kr/imgs'

GT_PATH = '/content/drive/Shareddrives/OCR_Transformer/SynthText_kr/gt'

h5_PATH ='gen/dataset_kr.h5' #'gen/dataset_kr.h5'

if os.path.isdir(IMG_PATH) == False :

os.mkdir(IMG_PATH)

if os.path.isdir(GT_PATH) == False :

os.mkdir(GT_PATH)

print('h5 file path : ',h5_PATH)

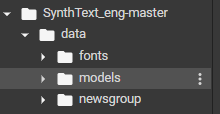

main(h5_PATH)3. SynthText - eng (original)

+ Link the char_freq file

(The rest is the same.)

4. Korean_aihub
